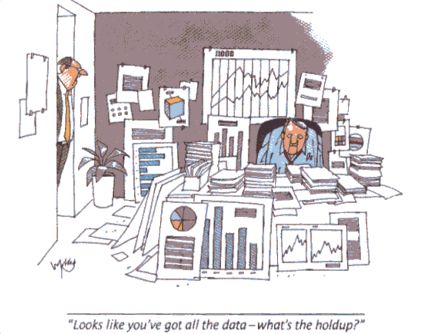

I was tempted to title this, “Metrics, metrics, everywhere, but not a solution in sight,” as a twist on the famous line “Water, water, every where, nor any drop to drink” from Coleridge’s “The Rime of the Ancient Mariner.”

We are drowning in data. We live in a world where tools, including AI, can provide endless analyses and insights into the data. We establish endless metrics that help us track trends and performance. We inflict endless, sometimes contradictory metrics on our people, mostly overwhelming and confusing them.

We live by the numbers–or worse, by the metrics–thinking they are the answers to all our problems and challenges.

But they aren’t!

At best, they help us identify problems, challenges, and opportunities. They might show us something is off. But they never tell us why something is happening or what to do about it.

At worst, and more typically, they distort behavior. Rather than focusing on the work that needs to be done, we shift to work that satisfies the metrics. Activity becomes less focused. Rather than looking at the activities that drive outcomes, we focus on the activities themselves. The number of meetings becomes the goal, and what’s accomplished in those meetings is lost. More meetings look better, when fewer meetings might have greater impact.

I was struck by a post from someone addressing the question, “How do you know if your coaching is working?” It’s an important question–one too few managers actually think about. The problem is, this individual was proposing 21 metrics to prove your coaching is having an impact. I’ve spent much of my career coaching leaders and individual contributors. I’ve spent much of it teaching leaders how to coach with even greater impact. I’ve even written a little about it. But I can’t imagine 21 metrics to track whether your coaching is having an impact. What I can imagine is how our quest to hit those goals actually diverts us from the reason coaching is the most important use of our time.

And then I think of piling these 21 metrics on top of all the other metrics managers track and the metrics their managers use to track them.

So we are overwhelmed and overloaded with metrics. The key issue is that they tend to divert us from understanding what is really happening and addressing those issues. Or possibly, they give us an excuse for not diving in and really solving the problems. We focus on the metric and not the problem, because solving the problem is much tougher.

“Metrics are the smoke detector, not the fire extinguisher.” (Thank you ChatGPT.)

Our job is to maximize the performance of everyone in the organization, including our own performance. It is to ensure we are doing everything possible to achieve the goals/outcomes expected of us.

Metrics and associated goals help us understand where we might be off target, where we aren’t achieving what we had hoped to achieve. They are the alarm that tells you something isn’t happening as it should.

Used well, metrics should spark the discussion. We need to talk to the people doing the work, diving into what is producing the results, exploring what we must change to more effectively achieve the goals we have established.

The other problem is that a single metric rarely provides the entire picture of what’s really happening. A single activity metric tracks only that activity, perhaps the number of outbound calls per day. By itself, it doesn’t explain why you are failing to build a healthy pipeline. Looking at a collection of related metrics starts to give you better insight, then helps you dive into collaborative diagnosis and problem solving with the people doing the work.

A single metric may be useful, but it can often drive the wrong focus. For example, chasing a “number of meetings” goal never causes you to ask, “What if we could get things done in fewer meetings?” I’ve told this story before. We have a relatively long, complex buying/sales cycle. Years ago, it took us roughly 22 “meetings” to close. Had we been focused on a number-of-meetings goal, we would never have changed that. We would have asked how many more 22-meeting cycles we could start. Instead, we set the goal of reducing the number of meetings to close. We reduced it to roughly 9. We more than doubled our productivity and doubled our revenue potential. More importantly, we were using our time and our prospects’ time much more effectively, driving higher levels of trust and much higher win rates. The meetings metric would never have caused us to ask ourselves that question.

Finally, there is metric/KPI overload and fatigue. We overwhelm our teams and ourselves with so many metrics that we simply don’t know where to focus or what’s most important. As a result, all the metrics cease to have meaning or impact.

What do we do?

- Find the critical few metrics that count. They may differ for different parts of the organization and different roles, but find the critical few for each. Pragmatically, I can’t imagine needing many more than 5 for most selling and GTM roles.

- Make sure your metrics are focused on outcomes. We don’t care about the work itself (with some caveats); we care that the work produces the desired outcome.

- No metric is forever. The metrics that are important to you today may be less important tomorrow, because we will face different problems and challenges.

- Never add a metric without first eliminating two others.

The real issue underlying all four of these is not “What metrics should we track?” It’s “What problems are we trying to solve, and how do we know we are solving them?”

As for the 21 metrics for coaching success: I’ve never used more than about 4, and a couple of those have nothing to do with coaching, but they help me understand whether the coaching is producing the outcomes needed.

Afterword: Here is another outstanding AI-generated discussion. Enjoy!

Which 4 metrics do you follow? Also, have you thought about writing a survival guide/book for Chief Growth Officers/Sales Executives who manage managers rather than front-line sales directors? I love your Sales Manager Survival Guide–have read it a few times. Thanks!

David, thanks for the thoughtful response. Some quick answers:

1. The four metrics I follow tend to vary with the person and what I am trying to achieve with that person. But in a sales manager role, I tend to look at:
   1. Am I spending at least one hour a week with each person? What we do in each hour may vary, but there is deep coaching involved in each.
   2. Is the person getting value from that coaching, based on the behavioral changes I’m coaching?

   There may be a couple of others. I am already tracking a few key performance metrics, like YTD goal attainment, but those are not “coaching-specific” metrics. Over the long term, they are key to seeing whether the person is improving.

   So when I say I track about 4 coaching metrics, I’m cheating a little, because a couple of non-coaching metrics also inform the impact of my coaching.

2. Thank you, I’m so flattered by your comments about SMSG. I am 5 years behind schedule in publishing the Sales Executive Survival Guide. I’ve been 85% done for all that time, but that 85% changes with each rewrite. Right now, the big question on my mind is, “Is a book the most powerful format?” A few of my author friends and I are revising our thinking. I hope to come out with something in the next year; I just don’t know what.

Thanks again!